This article follows « finding an usual cause of max_user_connections ».

After debugging a max_user_connections and a cache expiration problem in the customer app, we still experienced stalls of the mysqld process. MySQL refused all connections for 5 to 20 seconds, with no obvious reason. I’ll try to explain what I did to solve it.

Go on, sherlock

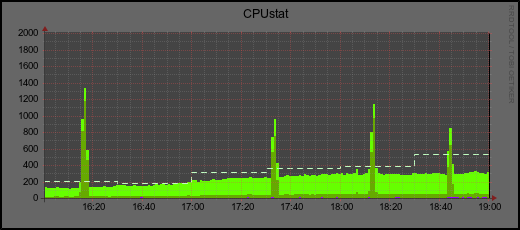

CPU usage was the following :

Legend: light green is user usage ; dark green is system usage ; violet is IOwait. Y-axis is thread usage (one thread at 100% == 100 in the graph).

Some useful informations on this image :

– there is no visible pattern on when the problem is happening (confirmed with a graph on 2 days).

– CPU usage is increasing for a minute …

and this usage is done by the system

I created a small bash script to get all data I needed when problem happened :

while sleep 1; do

mysql -e "SELECT 1" > /dev/null

if [ "$?" != "0" ]; then

echo "Collect !"

STAMP=$(date "+%H%M%S")

echo "Problem ! Start collecing"

# add here command line to be executed

sleep 5

else echo -ne ".\r"

fi

done ;

I first used this script to record a ‘top’ session to check which processes were eating all CPU. To get top output for 10 seconds I used the following command line :

top -b -d 1 -n 10 > $STAMP"_top" &

Remember $STAMP is the timestamp used in my bash script to group outputs.

Result ? mysqld is the top process, with linux kernel process [kworker]. Not a useful information by itself, but it definitely shows there is something wrong happening in the kernel.

How do you debug a kernel on a production server ? After checking various websites, I decided to use « oprofile » (http://oprofile.sourceforge.net). It consists of a kernel driver and a daemon for collecting sample data, and several post-profiling tools for turning data into information. To my mind, pros of this tool are :

- Sampling (compared to tools like strace)

- Can sample kernel too (compared to … strace)

- gather data in one place, and allow you to zip samples to perform the analysis you want on an other server.

Unfortunately I didn’t have oprofile on this server, so we decided to reboot the service in the middle of the night with the customer approval. The day after, we were able to sample some data.

First, let’s setup the tool with very low overhead :

opcontrol --setup --vmlinux=/root/vmlinux --event=CPU_CLK_UNHALTED:4000000

If you wondered : yes, I retrieved the uncompressed version of vmlinuz to /root/vmlinux. This is mandatory to profile kernel.

Then, let’s record a normal session to see what’s happening when everything is fine :

opcontrol --start --vmlinux=/root/vmlinux

sleep 5

opcontrol --stop

opcontrol --dump

opcontrol --save=idlesession

Top 5 of symbols were the following :

samples % symbol name

462229 99.0696 poll_idle

559 0.1198 clear_page_c

185 0.0397 find_busiest_group

139 0.0298 clflush_cache_range

114 0.0244 mutex_spin_on_owner

Everything normal here.

Note : I was not able to see symbols on the same server because of a buggy binutils library. This is a known problem that is affecting oprofile. Solution was simple : copy /var/lib/oprofile to another server and use opreport with –session-dir like this :

opreport --session-dir=/root/var/lib/oprofile --symbols session:idlesession vmlinux |head

You’ll need vmlinux binary in your current folder (for debugging vmlinux symbols. Or if you want it system-wide, just use –root. See oprofile doc for more informations).

I then modified the bash script I used earlier, and added the following :

opcontrol --start --vmlinux=/root/vmlinux

sleep 5

opcontrol --stop

opcontrol --dump

opcontrol --save=$STAMP

We waited a few hours, and collected two sessions. Results were very close so here is the output :

samples % symbol name

1000515 78.9433 poll_idle

217103 17.1300 compaction_alloc

6822 0.5383 compact_zone

5304 0.4185 spin_lock

4378 0.3454 flush_tlb_others_ipi

Very interesting ! There were many CPU cycles in compaction_alloc. What is this function ? Let’s read kernel source code mm/compaction.c around line 410 :

406 /*

407 * This is a migrate-callback that "allocates" freepages by taking pages

408 * from the isolated freelists in the block we are migrating to.

409 */

410 static struct page *compaction_alloc(struct page *migratepage,

411 unsigned long data,

412 int **result)

By experience, I know that this can be related to ‘huge pages’ functionality. Are they enabled ?

sysctl -a |fgrep vm.nr_hugepages

vm.nr_hugepages = 0

Seems not, but wait a second : what about « transparent huge pages » ? This is closely related and after some thought, may fit more for the problem we have currently. If you want to read more about « THP », this article by Jonathan Corbet is really interesting : http://lwn.net/Articles/423584/. You can also take a look at the official documentation inside kernel written by the same guy : http://lwn.net/Articles/423592/

To check if THP are enabled, do the following :

cat /sys/kernel/mm/transparent_hugepage/enabled

[always] madvise never

This was confirmed by seeing a process « khugepaged » in processlist 😉

« So, what happened ? »

This server has 128GB RAM. Memory can get very fragmented, and once in a while, the Linux Kernel tried to migrate THP to defrag memory. This process seemd too agressive in our case and resulted to stall.

« Can it be fixed ? »

Long trend solution would be making this migration less aggressive. After a few reads on Linux Kernel mailing list, I found that someone already mentioned that the current code is too drastic and can be less aggressive : https://lkml.org/lkml/2011/7/26/103

This patch is not in kernel 3.2 nor in current trunk and I do not want to maintain kernel patches in our compil process 🙂 We could also tune khugepaged behaviour with keys in /sys/kernel/mm/transparent_hugepage/khugepaged/.

But I needed a quick fix, so we disabled THP completely like this :

echo "never" > /sys/kernel/mm/transparent_hugepage/enabled

We now waited for 24 hours and no more stalls ! Problem solved \o/

Conclusion

In this process I discovered that oprofile can be a great ally when you are fighting kernel strange behaviour, or even if you want to know what MySQL is doing. I could not use gdb nor strace because it was crashing MySQL everytime (this is because this is a very big server with 64 threads and heavy load…). Oprofile gave me the results I wanted and has no impact on performance when it is not active.

Once again, solving this bug required large panel of skills and I’m very proud of it 🙂 Fortunately, 99% of problems encountered everyday are not as tough as this one.

Bonjour Olivier,

Just a quick note from Okta to thank you for publishing this article: http://www.olivierdoucet.info/blog/2012/05/19/debugging-a-mysql-stall/. We too had a problem with very high system CPU spikes and MySQL stalls. In our case they came 6 or more hours apart, and froze the database for minutes at a time. Neither Percona nor Pythian consultants could figure out the cause. After Googling your article, we set ‘transparent_hugepage=never’ and our database has been incident free for days.

Thank you too for translating your article, as none of the engineers involved in investigating the problem spoke French (and I didn’t have the patience to translate word for word ;).

Please come visit Okta if you are in San Francisco, we owe you a very nice lunch.

A bientôt,

Frédéric

Thank you Frédéric ! I’m glad my post helped you solved this problem.